For Leti and ST, the Fastest Way to Edge AI Is Through the Memory Wall

In exclusive interviews with EE Times Europe, François Andrieu, head of advanced memory and computing at CEA-Leti, and Giuseppe Desoli, R&D director and company fellow at STMicroelectronics, talked about how their organizations are exploring ways of bringing AI to the edge.

While compute performance has scaled exponentially in recent years, memory bandwidth and access times have not kept up—a disparity known as the “memory wall.” As AI models grow in size and complexity, especially with the proliferation of generative AI, moving data between memory and processing units requires significantly more time and energy than the computation itself. For every matrix-vector multiplication that drives a neural network, vast amounts of weight data must shuttle back and forth, and this constant data movement has become a primary barrier to performance and efficiency.
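To make the imbalance concrete, the following back-of-the-envelope sketch counts the arithmetic operations and the bytes moved for a single matrix-vector multiplication. The layer size (1,024 × 1,024) and the 2-byte weight format are illustrative assumptions, not figures from Leti or ST, and the function name `matvec_traffic` is hypothetical.

```python
def matvec_traffic(rows, cols, bytes_per_value):
    """Return (operations, bytes_moved, ops_per_byte) for y = W @ x.

    Assumes every weight is fetched from memory once and nothing is
    reused from a cache, which is the typical case for a single
    inference pass over a large weight matrix.
    """
    ops = 2 * rows * cols                           # one multiply and one add per weight
    weight_bytes = rows * cols * bytes_per_value    # the weight matrix W
    vector_bytes = (rows + cols) * bytes_per_value  # read x, write y
    bytes_moved = weight_bytes + vector_bytes
    return ops, bytes_moved, ops / bytes_moved


# Hypothetical layer: 1,024 x 1,024 weights stored as 16-bit values.
ops, moved, intensity = matvec_traffic(rows=1024, cols=1024, bytes_per_value=2)
print(f"operations:  {ops}")
print(f"bytes moved: {moved}")
print(f"ops/byte:    {intensity:.3f}")
```

Under these assumptions the workload performs roughly one operation per byte fetched, so throughput is set by how fast weights can be delivered rather than by how fast the arithmetic units can run.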

The case for in-memory computing at the edge

This challenge is particularly acute at the edge, where AI is moving rapidly in the form of embedded intelligence in local devices such as smartphones, industrial sensors, autonomous vehicles, and medical equipment. Deploying AI at the edge eliminates dependence on the cloud, enabling faster response times, greater data privacy, reduced energy consumption from transmission, and autonomous operation of devices in areas with limited connectivity. But the edge comes with a new set of constraints: minimal power, low latency, small form factors, and an increased need for reliability. These requirements are forcing researchers and industry alike to rethink traditional memory architectures.

To overcome the current limitations, researchers are turning to in-memory computing (IMC)—a model that brings processing closer to where data is stored, minimizing energy-intensive data movement. For edge AI, this is especially promising. IMC enables local computation within memory arrays themselves, opening new doors to energy efficiency and performance. The requirements for such memory are stringent. It must be dense enough to hold complex model weights, fast and power-efficient to enable real-time inference, highly durable for constant use, and compatible with compact, vertically integrated hardware.

One of the most promising technologies is phase-change memory (PCM), which ST and Leti have jointly developed. According to Desoli, PCM’s combination of high density, nonvolatility, and reliability makes it especially well-suited for automotive AI applications, where robustness and zero-defect tolerance are essential. “We’ve already prototyped a hybrid architecture that combines high-density PCM with SRAM-based in-memory compute,” he said. “This pairing meets both density and performance requirements—key for emerging automotive AI use cases, especially as AI moves beyond ADAS into zonal architectures and lower-tier functions.”

But PCM is not the only IMC approach that ST is working on, and automotive is not the only application domain the company is considering. “It’s an edge AI enabler across a wide range of consumer and industrial products,” Desoli said.

From PCM to 3D integration

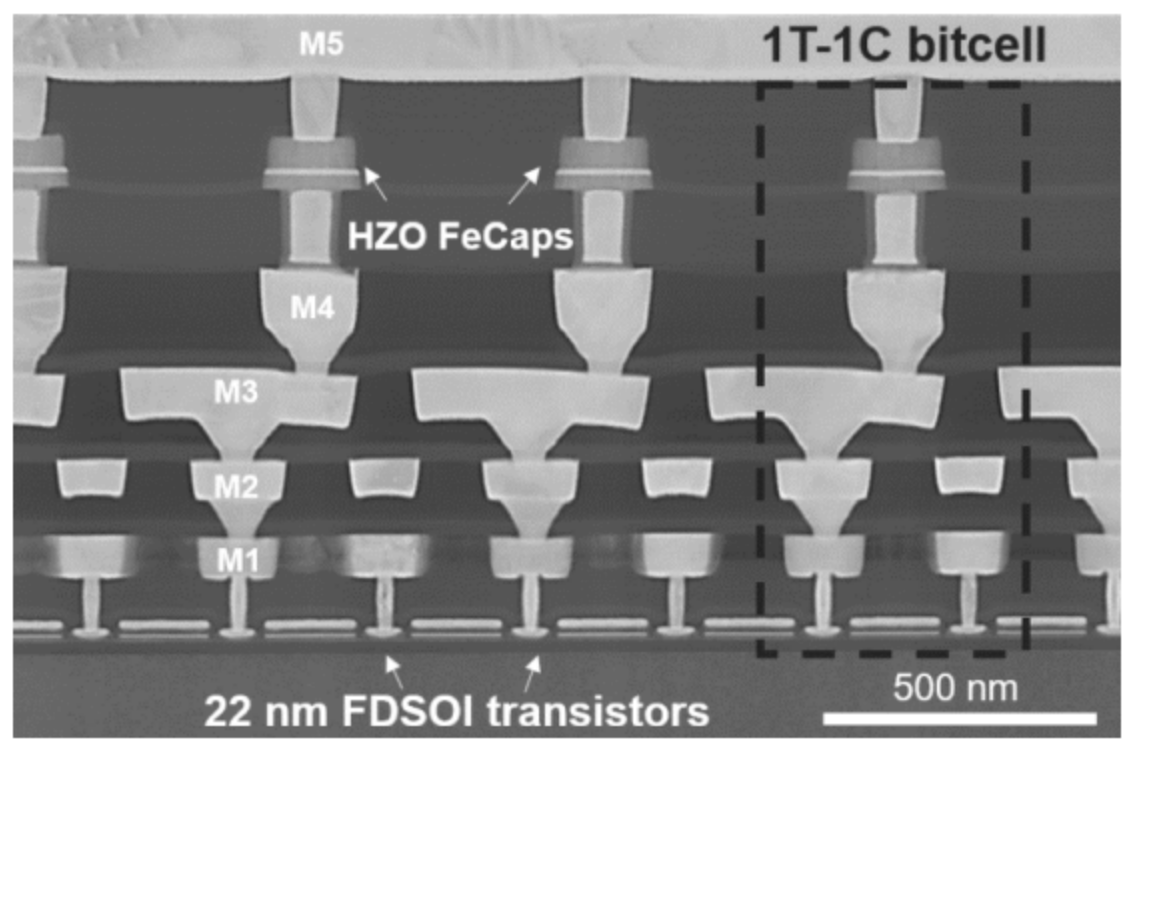

Andrieu outlined a broader ecosystem of memory innovation. In addition to PCM, Leti is also investigating a range of other approaches, including oxide-based resistive RAM (OxRAM), magnetic RAM (MRAM), and ferroelectric memories. Each type of memory has a specific set of advantages. OxRAM, for instance, is well-suited for cost-sensitive, AI-specific use cases. MRAM provides robustness in high-write environments. And ferroelectric memories offer excellent energy efficiency and endurance. “In generative AI, constant computation stresses part of the memory devices,” he said. “Ferroelectric memories offer high endurance and low power consumption, which is why we’re investing in them.”

According to Andrieu, there is no one-size-fits-all solution—different applications demand different technologies. CEA-Leti continues to pursue several approaches in parallel, often in partnership with organizations like ST and Weebit Nano.

The integration of memory and compute is not only a question of materials and device physics; it also involves system architecture, particularly 3D integration, which, according to Andrieu, is crucial for AI because it increases the memory capacity and offers the opportunity to integrate different technologies or CMOS nodes. “With dense vertical interconnects, we can bring memory and compute closer together, thereby increasing bandwidth and reducing latency,” he said.

Leti is driving innovation in monolithic 3D integration, which builds logic and memory directly on the same wafer. By contrast, hybrid bonding is used to join separately fabricated layers. “Monolithic 3D devices achieve the highest density, but hybrid bonding—stacking chips or wafers—is another effective approach,” Andrieu said. “Both boost performance by minimizing data transfer delays.”

Desoli confirmed that ST has been using hybrid bonding in production for years, especially in imaging sensors that combine optical and digital components. Hybrid bonding offers a practical, scalable path to market for edge AI devices, especially those that incorporate sensing. While monolithic 3D stacking allows for even finer granularity and flexibility at the gate level, it introduces new challenges, particularly in design automation tools. According to Desoli, partitioning logic across vertical layers transforms a 2D design problem into a much more complex 3D one. That said, both he and Andrieu are optimistic about the long-term promise of 3D integration.

Turning research into reality

According to Desoli, thermal management is one of the thorniest challenges in 3D stacked systems. “As layers of memory and compute are packed closer together, heat can build up in the middle layers, threatening reliability and performance,” he said. To tackle this, ST is working with EDA vendors to integrate thermal simulation into the design workflow. Desoli said AI-accelerated multiphysics simulation tools help model heat dissipation efficiently, allowing designers to explore more variants in less time. Leti, meanwhile, is co-designing circuits and physical layouts to minimize hotspots and developing new materials and structures to enhance thermal dissipation.

When asked about the barriers to bringing advanced memories from lab to mass production, both experts were candid. Desoli noted that moving a memory from prototype to production can take five to 10 years, involving repeated redesigns, process refinements, and extensive reliability testing. Even mature technologies like PCM and ReRAM still face hurdles. Better modeling tools, possibly enhanced by AI, could help reduce the number of costly prototyping cycles. Andrieu added that successful integration also depends on co-design across the entire stack—from devices and circuits to algorithms and applications. Leti is pursuing this through partnerships with research institutions such as C2N, ETH Zurich, and Aix-Marseille University, as well as with industrial collaborators.

Sustainability is another key focus. As generative AI models are miniaturized and pushed to the edge, efficiency becomes paramount. Leti has demonstrated solar-powered neural networks and ultra-low-power binarized networks that use 1-bit quantization. ST is taking a similar approach, with compute blocks that support dynamic precision scaling, down to 4-, 2-, or even 1-bit operations. This kind of deep quantization is essential for maximizing energy efficiency in edge AI hardware.
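As a rough illustration of what scaling precision down to 4, 2, or 1 bit means for stored weights, the sketch below applies a generic symmetric uniform quantizer to a handful of made-up weights and reports the resulting storage footprint. It is a textbook scheme chosen for clarity, not a description of the Leti or ST hardware; the function names and sample values are assumptions.

```python
def quantize(weights, bits):
    """Map float weights to signed integer levels of the given bit width."""
    if bits == 1:
        # Binarization: keep only the sign of each weight.
        return [1 if w >= 0 else -1 for w in weights]
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 1 for 2-bit
    # Scale so the largest magnitude maps to qmax; fall back to 1.0 if all zeros.
    scale = (max(abs(w) for w in weights) / qmax) or 1.0
    return [max(-qmax, min(qmax, round(w / scale))) for w in weights]


def footprint_bytes(num_weights, bits):
    """Storage needed for num_weights values at the given bit width."""
    return num_weights * bits / 8


weights = [0.8, -0.35, 0.1, -0.9]  # made-up example values
for bits in (4, 2, 1):
    print(bits, quantize(weights, bits), footprint_bytes(1_000_000, bits))
```

In this sketch, each halving of the bit width halves the memory needed for a million weights, which is the main lever behind the energy savings: fewer bits stored means fewer bits moved.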

The Leti-ST partnership exemplifies the broader goal of building a sovereign European AI hardware ecosystem. According to Andrieu, Europe still faces gaps in areas such as fabless design companies, advanced nodes, and domestic memory suppliers. However, strengths in automotive, analog design, and sensor interfaces provide a solid foundation. Through pilot lines and close industry collaboration, Leti and ST aim to move innovations quickly from lab to market.

The roadmap is ambitious. In the near term, both partners are working to scale their prototypes—PCM, OxRAM, ferroelectric, and gain-cell memories—into test chips and production-ready modules. These will target applications in automotive AI, distributed IoT, smart sensors, and more. Long-term plans include the development of tightly integrated, wafer-stacked systems that combine sensing, computing, and memory, laying the groundwork for a new class of edge devices.